The third major part of the synthesis process is the generation of the waveform itself from some fully specified form. In general this will be a string of segments (phonemes) with durations, and an F0 contour.

There are three major types of synthesis techniques: articulatory synthesis, formant synthesis and concatenative synthesis.

We will only talk about concatenative synthesis here. Many of the more practical aspects of waveform synthesis are discussed in the Festival document (http://festvox.org), which includes detailed instructions and code for building new diphone synthesizers.

Concatenating phonemes has the problem that all the joins happen at what is probably the least stable part of the waveform: the transition from one phone to the next. Concatenating diphones (from the middle of one phone to the middle of the next) means that joins are more likely to happen in a stable part of the signal. This effectively squares the number of units required, but that can still be feasible. The number of phones varies significantly between languages: for example, Japanese has around 25 phones, giving rise to about 625 diphones, while English has around 40 phonemes, giving rise to about 1600 diphones.
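The squaring is easy to see by enumerating the ordered phone pairs. A minimal Python sketch; the phone names are placeholders, and only the counts 25 and 40 come from the text:

```python
from itertools import product


def diphone_inventory(phones):
    """Return every ordered phone pair as a diphone name, e.g. 'k-aa'.

    Pairs of a phone with itself are included, so the result has
    len(phones) ** 2 entries: the "squaring" described in the text.
    """
    return [f"{a}-{b}" for a, b in product(phones, repeat=2)]


# Placeholder phone names; only the phone counts come from the text.
for language, n_phones in [("Japanese", 25), ("English", 40)]:
    placeholder_phones = [f"ph{i}" for i in range(n_phones)]
    print(language, len(diphone_inventory(placeholder_phones)))  # 625, then 1600
```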

Of course, not all diphones are really the same, and it has been shown that using some consonant clusters as units can be better than strictly using diphones. Most "diphone" synthesizers today include some commonly occurring triphone and nphone clusters.

As an inventory containing single occurrences of diphones will not provide the desired prosodic variation, the diphones are modified by some form of signal processing to get the desired target prosody.

There are two methods for collecting diphones: they may be extracted from a set of nonsense words or from a set of natural words.

Generating nonsense words that provide diphone coverage in a language is relatively easy. A simple set of carrier words is constructed and each phone-phone combination is inserted. For example, we might use the carrier

pau t aa C V t aa pau

where each consonant and vowel is inserted. Typically we need slightly different carriers for the different combinations: consonant-vowel, vowel-consonant, vowel-vowel and consonant-consonant. We also need carriers for silence-phone and phone-silence.
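Generating the prompt list is mechanical once the carriers are fixed. The sketch below assumes a simple vowel/consonant split; only the CV carrier is taken from the text, and the other carriers (including the silence ones) are hypothetical stand-ins that would need phonetic review for a real language:

```python
# Only the CV carrier below comes from the text; the VC, VV, CC and silence
# carriers are hypothetical stand-ins that would need phonetic review.
CARRIERS = {
    "CV": "pau t aa {a} {b} t aa pau",
    "VC": "pau t {a} {b} aa pau",
    "VV": "pau t aa t {a} {b} t aa pau",
    "CC": "pau t aa {a} {b} aa t aa pau",
}
SILENCE_INITIAL = "pau {p} t aa pau"    # hypothetical pau-X carrier
SILENCE_FINAL = "pau t aa t {p} pau"    # hypothetical X-pau carrier


def carrier_class(a, b, vowels):
    """Classify a phone pair as CV, VC, VV or CC."""
    return ("V" if a in vowels else "C") + ("V" if b in vowels else "C")


def nonsense_words(consonants, vowels):
    """Yield (diphone name, prompt phone string) for every diphone,
    including silence-phone and phone-silence."""
    vowel_set = set(vowels)
    phones = list(consonants) + list(vowels)
    for a in phones:
        for b in phones:
            template = CARRIERS[carrier_class(a, b, vowel_set)]
            yield f"{a}-{b}", template.format(a=a, b=b)
    for p in phones:
        yield f"pau-{p}", SILENCE_INITIAL.format(p=p)
        yield f"{p}-pau", SILENCE_FINAL.format(p=p)


for diphone, prompt in nonsense_words(["t", "k"], ["aa", "iy"]):
    print(diphone, "|", prompt)
```

For this toy set of four phones the loop prints 16 phone-pair prompts followed by 8 silence prompts.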

In most languages there are additional phones (or allophones) which should be included in the list of diphones. In some dialects of English /l/ has significantly different properties in onset position compared with coda position (light vs dark L). In US English, flaps are common as a reduced form of /t/. These require their own carrier words and a concrete definition of where they do and do not occur (which may vary between particular speakers).

Some consonant clusters should also be considered and given their own diphones. In our English voices we typically include both an s-t diphone for the syllable-boundary case (as in "class tie") and an s_-_t diphone for st within a consonant cluster (e.g. "mast"). Some other diphone systems introduce "diphones" for longer units: instead of having a special type of s for use in consonant clusters, they introduce a new pseudo-phone st.

The addition of extra diphones over and above the basic standard is a judgement based on the effect it will have on synthesis quality as well as the number of extra diphones it will introduce. As the speaker will tire over time, it is not possible to simply add new allophones and still have a manageable diphone set (e.g. adding stressed vowels will almost double the number of diphones in your list).

As we stated, there are two ways to collect diphones: first, as described above, from nonsense words, and second, from a list of natural words. Most of the points above apply to either technique. As yet there is no conclusive proof that either technique is better than the other, as that would require careful recording of both types in the same environment. As recording, labelling and tuning are expensive from a resource point of view, few people are willing to test both methods, but it is interesting to point out the basic advantages and disadvantages of each.

The nonsense word technique has the advantage of making coverage simpler to ensure. Words are specified as phone strings, so you know exactly how they should be pronounced. The main disadvantage of the nonsense word technique is a loss of naturalness in delivery: the nonsense words are unfamiliar to the speaker and therefore are not produced as naturally as real words would be. This is probably one factor that makes diphone synthesizers appear to lack a certain amount of naturalness, though the main factor is probably that the speaker must deliver some 1500 words in such a controlled environment that it is not surprising that the resulting voices sound bored.

The natural word technique has the potential to provide more natural transitions. There are problems, though: it is difficult to prompt a speaker to pronounce the words in the intended form. If flaps are required (or not required), it can be harder to coach the speaker to say the intended phone string. Also, because the list consists of natural words, the speaker will say them in a more relaxed style, which may make the overall quality less consistent and therefore less suitable for concatenation and prosodic modification. It is also harder to find natural words that are reasonably familiar, have standard pronunciations and properly cover the space, especially when working in new languages. But the relative merits of these techniques have not really been fully investigated.

In more recent collections of diphone databases, following OGI's method, we recommend that diphones (at least as nonsense words) are delivered with as near constant articulation as possible. Thus they are recorded in a monotone, with near fixed durations and near constant power. The reasoning behind this is that, as these segments are to be joined with other segments, we wish them to be as similar as they can be to minimise distortion at join points.

There are ideals for a recording environment: an anechoic chamber, studio-quality recording equipment, an EGG signal and a professional speaker. In reality this ideal is rarely reached. In general we settle for a quiet room (no other computers or air conditioning) and a PC or laptop. A head-mounted microphone is very important, and it is so cheap to provide that it is not worth giving up that requirement. Without a head-mounted microphone, the speaker's position relative to the (handheld or desk-mounted) microphone may change, and that change introduces acoustic differences that would be better avoided.

Laptops are relatively good for recording as they can be taken to quiet places. Also, as power supplies and CRT monitors are particularly (electrically) noisy, a laptop running on batteries is likely to be a quieter machine for recording. However, you also need to take into account that audio quality on laptops is more variable than on desktops (and it is much easier and more practical to change the sound board on a desktop than on a laptop). Although machines always generate some electrical noise, the advantage of using a computer to record utterances directly into the appropriate files should not be underestimated. Previously we recorded to DAT tape and later downloaded that tape to a machine. The process of downloading and segmenting into the appropriate files is quite substantial (at least a number of days' work). Given how much of the rest of the process is automatic, that transfer was by far the most substantial part, and the benefits of a better recording may not in fact be worth it.

However, the choice of off-line recording should be weighed properly. If you are going to invest six months in a high-quality voice, it is probably worth the few extra days' work, while if you just need a reasonable-quality voice to build a synthesizer for next week, recording directly to the machine (after ensuring you are getting optimal quality) is probably worth it. But when using a machine to record, it is still paramount to use a head-mounted microphone and proper audio settings to minimise channel noise.

Once the diphone recordings have been made, the next task is to label them. In the case of nonsense words, labelling is much easier than phonetic labelling of continuous speech. The nonsense words are defined as strings of particular phones, so no decision about what has been said is necessary; only where each phone starts and ends must be determined. However, it is possible that, in spite of careful planning and listening, the speaker has pronounced a nonsense word wrongly; that should be checked for, but it is relatively rare.

In earlier work we hand labelled the words. As stated, this isn't too difficult, mostly just laborious. However, following malfrere97, we have taken to autolabelling the nonsense words. Specifically, we use synthesized prompts and time-align their generated cepstrum vectors with the spoken form. The results from this are very good. Most human labellers make mistakes, and hence even with human labellers multiple passes with corrections are required. The autolabeller actually produces something better than an initial human labelling pass, and takes around 30 minutes as opposed to 20-30 hours.
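The core of this kind of alignment can be sketched with plain dynamic time warping (DTW). This is a minimal illustration, not the actual aligner: it assumes the prompt and the recording have already been turned into sequences of cepstral frame vectors (feature extraction is not shown), uses Euclidean frame distance, and maps each prompt phone start to the first recorded frame aligned with it:

```python
import math


def dtw_path(prompt, spoken):
    """Classic DTW between two sequences of feature vectors.

    Returns the warping path as a list of (prompt_frame, spoken_frame) pairs.
    """
    n, m = len(prompt), len(spoken)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(prompt[i - 1], spoken[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the end to recover the aligned frame pairs.
    i, j, path = n, m, []
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        _, i, j = min((cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1))
    return path[::-1]


def map_boundaries(prompt_boundaries, path):
    """Map prompt phone-start frames to the first spoken frame aligned with them."""
    first_match = {}
    for p, s in path:
        first_match.setdefault(p, s)
    return [first_match[b] for b in prompt_boundaries]


# Toy one-dimensional "cepstra": the second phone starts at prompt frame 2.
prompt = [[0.0], [0.0], [5.0], [5.0]]
spoken = [[0.0], [0.0], [0.0], [5.0], [5.0]]
print(map_boundaries([0, 2], dtw_path(prompt, spoken)))  # [0, 3]
```

Because the prompt's label file already carries the phone boundaries (and, as discussed below, the stable points), the same mapping carries those positions across to the recording.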

Even when you are collecting a new language for which prompts in that language cannot be generated, the autolabelling technique is still better than hand labelling. In the nonsense word case the phone string is very well defined, and it can be aligned against even an approximation of the native pronunciation. For example, we took a Korean diphone database and generated prompts in English. Even though Korean has a number of phones for which there is no equivalent in English (aspirated versus unaspirated stops), the alignment works sufficiently well to be practical. Remember, the alignment doesn't need to detect the difference between phones, only how best an existing phone list can be aligned to another.

However, although the autolabelling process is good, it still benefits from hand correction. In general the autolabelling will get the whole word correct, but occasionally (less than 5% of the time) the whole word is wrongly labelled. Often the reason can be identified, such as a lip smack causing a /t/ to be anchored in the wrong place, but at other times there is no obvious reason for the error. In these cases hand correction of the labels is required.

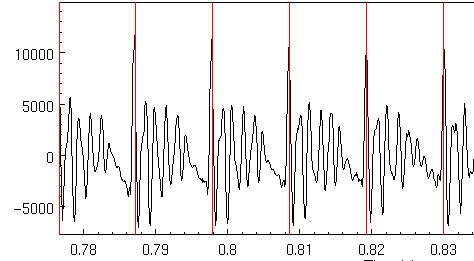

It would be good if we could automatically identify where the bad labels are so they can be presented for correction. This isn't as self-referential as it first appears. Although we have not released tools to do this, we have made initial investigations of techniques that can detect when labelled phones are unusual. The simplest technique is to find all phones whose duration is more than three standard deviations from the mean; this often points to an erroneously labelled utterance. Secondly, we note that finding phones whose power and frequency-domain parameters fall outside a three-standard-deviation range also identifies potential problems.
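The duration check is simple to sketch. A minimal Python version, assuming the labels are available as (utterance, phone, duration) tuples and that the mean and standard deviation are computed separately for each phone type (an assumption; the text only says "from the mean"). The same test could be applied to power or spectral parameters:

```python
import statistics
from collections import defaultdict


def duration_outliers(segments, n_sd=3.0):
    """Flag segments whose duration is more than n_sd standard deviations
    from the mean duration of the same phone.

    segments: list of (utterance_id, phone, duration_in_seconds).
    """
    by_phone = defaultdict(list)
    for _, phone, dur in segments:
        by_phone[phone].append(dur)
    # Per-phone mean and sample standard deviation (needs at least 2 examples).
    stats = {
        phone: (statistics.mean(durs), statistics.stdev(durs))
        for phone, durs in by_phone.items()
        if len(durs) >= 2
    }
    flagged = []
    for utt, phone, dur in segments:
        if phone not in stats:
            continue
        mean, sd = stats[phone]
        if sd > 0 and abs(dur - mean) > n_sd * sd:
            flagged.append((utt, phone, dur))
    return flagged
```

With few examples of a phone the outlier itself inflates the standard deviation, so the check becomes more useful as the database grows.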

Irrespective of the labelling technique, what we require is phone boundaries with an accuracy of about 10ms, though up to 20ms may actually be sufficient. More importantly, though, we need to know where the stable part of each phone is so we can place the diphone boundary. In the autolabelling case we can include in the label file the known diphone boundary (stable point) that exists in the prompt, so autolabelling gives not only the phone boundaries but also the stable part as identified in the prompt.

When no boundaries are explicitly available (e.g. when cross language alignment is done or labels are pre-existing), we use the following rules:

Note that these are guesses; they are not optimal. If you investigate the best diphone synthesizers out there, you will find that the stable points have all been hand labelled, very carefully, by looking at spectrograms and listening to joins. Such careful work does improve the quality. In fact most of the autolabelled voices we have done included a fair amount of careful labelling of stable points (though we now benefit from that when relabelling using these databases as prompts).

Rather than using heuristics, more specific measurements can be made to find the optimal join point. conkie96 describes a method for finding optimal join points by measuring the cepstral distance at potential join points. Either the best (smallest-distance) point can be used for each individual join, or an average best place can be selected. Such a technique is used at run time in the unit selection techniques discussed below.
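A minimal sketch of the per-join version of this search, assuming each unit is available as a list of cepstral frame vectors and that only a few frames either side of the labelled boundary are tried. Euclidean distance and the window size are illustrative choices, not necessarily those of conkie96:

```python
import math


def best_join(left_frames, right_frames, left_end, right_start, search=2):
    """Search near the labelled join for the frame pair with the smallest
    cepstral (Euclidean) distance.

    left_frames, right_frames: lists of cepstral vectors for the two units.
    left_end: labelled last frame of the left unit.
    right_start: labelled first frame of the right unit.
    search: number of frames to try either side of each labelled point.
    Returns (distance, i, j): keep left frames up to i and right frames from j.
    """
    left_range = range(max(0, left_end - search),
                       min(len(left_frames), left_end + search + 1))
    right_range = range(max(0, right_start - search),
                        min(len(right_frames), right_start + search + 1))
    return min(
        (math.dist(left_frames[i], right_frames[j]), i, j)
        for i in left_range
        for j in right_range
    )
```

The "average best place" alternative would instead accumulate these distances over many joins for a diphone and fix one cut point for it offline.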

Although selecting diphones will give a fair range of phonetic variation, it will not give the full prosodic or even spectral range that is really desired. If we could select longer units, or more appropriate units, from a database of speech, the resulting concatenated forms would not only be better, they would also require less signal processing to correct them to the targets (signal processing introduces distortion, so minimising its use is usually a good idea). We could use finer phonetic classification, such as stressed vs. unstressed vowels, thus explicitly increasing the size of our inventory.

Sagisaka (nuutalk92) used a technique to select non-uniform units from a database of isolated words. These are called non-uniform because the selected units may be phones, diphones, triphones or even longer. The inventory thus contained many examples of each phone, and acoustic measures were used to find the most appropriate units. In many cases multi-phone units would be selected, especially for common phonetic clusters.

This work was only for Japanese, and it specifically excluded prosodic measures in selection (namely duration and pitch), even though most people agreed that such features would be relevant. Further work in Japan extended the ideas of nuutalk92 and campbell92b to develop a general unit selection algorithm that found the most appropriate units based on any (prosodic, phonetic or acoustic) features (black95d), implemented in the CHATR system.

hunt96 further formalised this algorithm, relating it to standard speech recognition algorithms and adding a computationally efficient method for training feature weights. Other techniques for selecting appropriate units are appearing.

donovan95 offers a method for clustering example units to find the most prototypical one. black97b also uses a clustering algorithm but, like hunt96, then finds the best path through a number of candidates. Microsoft's Whistler synthesizer also uses a clustering algorithm to find the best example of units (huang97).

In the following sections we describe in particular the work of hunt96, black97b and taylor99b as three typical selection algorithms; they are also the ones we are most familiar with. hunt96 was implemented in the CHATR speech synthesis system from ATR, Japan. As the hunt96-based selection algorithm is proprietary, no implementation is available in Festival, but an implementation of black97b is freely available.

What we need to do is find which unit in the database is the best to synthesize some target segment. But this raises the question of what "best" means. It obviously has something to do with the closest phonetic, acoustic and prosodic context, but what we need is an actual measure with which, given a candidate unit, we can automatically score how good it is.

The right way to do this is to play it to a large number of humans and get them to score how good it is. This unfortunately is impractical, as there may be many candidates in a database and playing each candidate for each target (and all combinations of them) would take too long (and the humans would give up and go home). Instead we must have a signal processing measure which reflects what humans perceive as good synthesis.

Specifically, we want a function that measures how well a given unit can be inserted into a particular waveform context. We can set up a psycho-linguistic experiment to evaluate some examples so that we have a human measure of appropriateness, and then test various signal-processing-based distance measures to see how well they correlate with the human judgements.

hunt96 propose a cepstral-based distance, plus F0 and a factor for duration difference, as an acoustic measure. This was chosen partly through experiments, but also by experience and refinement. It is this acoustic cost that will be used to define "best" when comparing the suitability of a candidate unit with a target unit. black97b use a similar distance but include delta cepstra too. donovan95 use HMMs to define acoustic distances between candidate units.
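A rough sketch of an acoustic cost of this general shape: an average cepstral distance plus an F0 term and a duration term. Everything specific here is an assumption for illustration, not the hunt96 or black97b definition: the dictionary representation of a unit, comparing a few evenly spaced frames to cope with different unit lengths, measuring F0 in the log domain, and the default unit weights:

```python
import math


def resample(frames, n):
    """Pick n frames spread evenly through the unit (nearest frame, no interpolation)."""
    if n == 1:
        return [frames[len(frames) // 2]]
    return [frames[round(k * (len(frames) - 1) / (n - 1))] for k in range(n)]


def acoustic_cost(a, b, n_points=3, w_cep=1.0, w_f0=1.0, w_dur=1.0):
    """Hypothetical acoustic distance between two units.

    a, b: dicts with 'cep' (list of cepstral frame vectors),
    'f0' (mean F0 in Hz, assumed voiced) and 'dur' (duration in seconds).
    """
    fa, fb = resample(a["cep"], n_points), resample(b["cep"], n_points)
    cep = sum(math.dist(x, y) for x, y in zip(fa, fb)) / n_points
    f0 = abs(math.log(a["f0"]) - math.log(b["f0"]))  # log-domain F0 difference
    dur = abs(a["dur"] - b["dur"])
    return w_cep * cep + w_f0 * f0 + w_dur * dur
```

A delta-cepstrum term, as in black97b, could be added as one more weighted component.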

During synthesis, of course, we do not have the waveform of the example we wish to synthesize (if we did, we wouldn't need to synthesize it). What we do have is the information in the target, which we must match with the information in the units in the database. Specifically, the target features will only contain information that we can predict during the synthesis process. This (probably) restricts it to phonetic and prosodic features, thus excluding spectral properties.

We do have the spectral properties of the candidate units in the database, but can't use them as the targets don't have spectral information to match to. We can however derive the phonetic and prosodic properties of the candidate units so we can use these to find a cost.

Specifically, both target units and candidate units are labelled with the same set of features. For each feature we can define a distance measure (e.g. absolute difference, equal/non-equal, squared difference), and we can define the target cost as a weighted sum of the feature differences.
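A minimal sketch of such a target cost. The feature names, their distance functions and the weights are hypothetical; the point is only the structure: one distance function per feature, combined as a weighted sum:

```python
def same(a, b):
    """Equal / non-equal distance for symbolic features."""
    return 0.0 if a == b else 1.0


# Hypothetical feature set and per-feature distance functions.
FEATURE_DISTANCES = {
    "prev_phone": same,
    "next_phone": same,
    "stressed": same,
    "syl_position": lambda a, b: abs(a - b),  # absolute difference
    "f0": lambda a, b: (a - b) ** 2,          # squared difference
}


def target_cost(target, candidate, weights):
    """Weighted sum of per-feature distances between a target and a candidate."""
    return sum(
        weights[name] * dist(target[name], candidate[name])
        for name, dist in FEATURE_DISTANCES.items()
    )


target = {"prev_phone": "t", "next_phone": "n", "stressed": True, "syl_position": 1, "f0": 110.0}
candidate = {"prev_phone": "t", "next_phone": "m", "stressed": True, "syl_position": 2, "f0": 100.0}
weights = {"prev_phone": 1.0, "next_phone": 1.0, "stressed": 1.0, "syl_position": 1.0, "f0": 0.01}
print(target_cost(target, candidate, weights))  # 3.0
```

The weights here are arbitrary; estimating them properly is the subject of the training method described below.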

Of course this is not the same as the acoustic cost nor do we know what the weights should be for each feature. But we can provide a training method which solves that problem.

Experiments with representing pitch, duration etc. in different domains (e.g. the log domain) are worthwhile, as we do not yet know the best way to represent this information.

As stated above we define the target cost to be the weighted sum of differences of the defined features, and we wish that the target cost is correlated with the acoustic cost.

If we consider the special case where we compare known examples from the database we are synthesizing from: in this case we have the target features and the candidate features but also we can calculate the acoustic cost.

As in this "resynthesis case" we have both the acoustic cost and the target and candidate features, the only thing missing is the weights for the feature distances. We can thus use standard techniques to estimate the weights.

Weights are found for groups of phones, e.g. high vowels, nasals, stops etc.

For each occurrence of a unit in the database, find its acoustic distance to all other occurrences (of the same type). Also find its target cost distance for each feature. This produces a large table of acoustic costs and lists of feature distances of the form

AC = W0 d0 + W1 d1 + W2 d2 ...

where only the Wn are unknown. We can then estimate the Wn for each class of units using linear regression.
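Estimating the weights is an ordinary least-squares problem. The dependency-free sketch below solves the normal equations directly; it assumes each row holds the feature distances d0, d1, ... for one pair of units alongside the measured acoustic cost AC, and it fits no intercept term (the text does not say whether one is used). In practice a numerical library would normally be used instead:

```python
def fit_weights(distances, acoustic_costs):
    """Least-squares estimate of W in AC = W0 d0 + W1 d1 + ... (no intercept)."""
    k = len(distances[0])
    # Build the normal equations (D^T D) W = D^T AC.
    ata = [[sum(row[i] * row[j] for row in distances) for j in range(k)] for i in range(k)]
    atb = [sum(row[i] * ac for row, ac in zip(distances, acoustic_costs)) for i in range(k)]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    m = [ata[i] + [atb[i]] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        if abs(m[col][col]) < 1e-12:
            raise ValueError("feature distances are linearly dependent")
        for r in range(col + 1, k):
            factor = m[r][col] / m[col][col]
            for c in range(col, k + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    w = [0.0] * k
    for i in reversed(range(k)):
        w[i] = (m[i][k] - sum(m[i][j] * w[j] for j in range(i + 1, k))) / m[i][i]
    return w
```

Following the text, one such fit would be run per class of units (high vowels, nasals, stops and so on).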

While hunt96 finds appropriate candidates by measuring the target cost at synthesis time, black97b effectively moves that costing into the training process.

Similar to donovan95, black97b clusters the occurrences of units into clusters of around 10 to 15 examples. Clustering is done to minimise the acoustic distance between members, using the acoustic distance defined in the previous section. The clusters are found by partitioning the data based on questions about features that describe units. These features are only those that are available at synthesis time and on target units, such as phonetic context, prosodic context, position in syllable, and position of syllable in word and phrase: the same sorts of features that are used in target costs for hunt96. The target cost in this case is the distance of a particular unit to its cluster centre.
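A greedy sketch of this kind of partitioning, in the spirit of the description above rather than the actual black97b code. At each node it tries every yes/no question, keeps the split that most reduces a size-weighted mean within-cluster acoustic distance, and stops when a split would leave fewer than min_size units on either side. The question format, impurity measure and stopping rule are all assumptions:

```python
from itertools import combinations


def impurity(units, acoustic_distance):
    """Mean pairwise acoustic distance within a cluster, scaled by its size."""
    if len(units) < 2:
        return 0.0
    pairs = list(combinations(units, 2))
    return len(units) * sum(acoustic_distance(a, b) for a, b in pairs) / len(pairs)


def build_tree(units, questions, acoustic_distance, min_size=10):
    """Greedy top-down partitioning of units by yes/no questions.

    questions: list of (name, predicate); each predicate(unit) -> bool should
    only use features that are also available on target units.
    Returns a leaf (list of units) or (question_name, yes_subtree, no_subtree).
    """
    current = impurity(units, acoustic_distance)
    best = None
    for name, predicate in questions:
        yes = [u for u in units if predicate(u)]
        no = [u for u in units if not predicate(u)]
        if len(yes) < min_size or len(no) < min_size:
            continue
        score = impurity(yes, acoustic_distance) + impurity(no, acoustic_distance)
        if score < current and (best is None or score < best[0]):
            best = (score, name, yes, no)
    if best is None:
        return units  # leaf: one cluster of candidate units
    _, name, yes, no = best
    return (name,
            build_tree(yes, questions, acoustic_distance, min_size),
            build_tree(no, questions, acoustic_distance, min_size))
```

At synthesis time a target would be dropped down the tree using the same questions, and the units in the leaf it reaches become its candidates; a unit's distance to its leaf's centre would then serve as its target cost (not shown).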

In this case the units are sorted into clusters during training rather than measured at synthesis time offering a more efficient selection of candidate units.

In later versions of this work, actual diphones are selected rather than full phones. This leads to better classification and better synthesis.

In both of the above techniques, selection is done on a per-phone (or per-diphone) basis. This may not be optimal: longer appropriate segments will only be found if the appropriate target costs (or cluster distances) are set up. In PSM a more explicit method is used to find appropriate longer units. In this case the database used for selection is labelled with a tree-based representation.

Synthesis requires matching a tree representation of the desired utterance against the database. This is done top down, looking for the best set of subtrees that can be combined into the full new utterance. The matches move further and further down the trees looking for a reasonable number of alternatives (say 5); if such a number is not found, matches are attempted at the next level down, until phone-level matches are made.

Specifically, this technique has the advantage of finding longer matches with less computation (and with less dependence on the target costs of adjacent phones in the database being similarly scaled). Also, although this tree method may include various features, it can primarily be done with phones (plus context, stress levels, accents, syllables, etc.), which is a much higher level of representation. The two methods above depend on the prediction of duration and F0, which we know are difficult to predict (and there is often more than one acceptable solution). By not using explicit prosody values in selection, appropriate prosody will (it is hoped) fall out of the selection process.

The problem is not just to find a candidate unit that is close to the target unit; the candidate units we select must also join together well. Unlike with the target cost, this time we have the waveforms of the units we are to join, so we can use spectral information in finding the distances. Again, after experiment and some experience, we can choose a set of measures to compare at the join point, and use a weighted sum of their differences to provide a continuity cost.
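The join cost can be sketched in the same weighted-sum style. The terms used below (cepstral distance, log F0 difference and power difference between the boundary frames), the unit representation and the convention that F0 = 0 marks an unvoiced frame are all assumptions for illustration; the text does not list the measures actually used:

```python
import math


def join_cost(left, right, w_cep=1.0, w_f0=1.0, w_power=1.0):
    """Continuity cost between the last frame of `left` and the first of `right`.

    Each unit is a dict of per-frame lists: 'cep' (cepstral vectors),
    'f0' (Hz, 0 meaning unvoiced) and 'power' (dB).
    """
    cep = math.dist(left["cep"][-1], right["cep"][0])
    f0_left, f0_right = left["f0"][-1], right["f0"][0]
    # Only compare F0 when both boundary frames are voiced.
    f0 = abs(math.log(f0_left) - math.log(f0_right)) if f0_left > 0 and f0_right > 0 else 0.0
    power = abs(left["power"][-1] - right["power"][0])
    return w_cep * cep + w_f0 * f0 + w_power * power
```

Treating a voiced/unvoiced mismatch as zero F0 cost is a simplification; a real system might penalise it instead.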

We can use this measure not just to tell us the cost of joining any two candidate units, but also for optimal coupling (conkie96), that is, finding the best place to join the two units. We vary the unit edges by a small amount to find the best place for the join. This technique allows different join points for different unit pairs, as well as automatically finding an optimal join, which means the labelling of the database (or diphones) need not be so accurate.

Given the target costs, whether as weighted distances (hunt96), distances from the cluster centre for members of the most appropriate cluster (black97b), or longer units such as those from PSM, together with the continuity costs, the best selection of units from a database is the one that minimises the total cost.

This can be found using Viterbi search with beam pruning.
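A compact sketch of that search, assuming the target and join costs are supplied as functions and that candidates have already been retrieved for each target (for black97b, the members of the chosen cluster). The beam simply keeps the cheapest partial paths after each step; real systems may prune differently and use more efficient data structures:

```python
def select_units(targets, candidates, target_cost, join_cost, beam=10):
    """Viterbi search for the candidate sequence with minimum total cost.

    targets: list of target specifications.
    candidates: candidates[i] is the list of database units for targets[i].
    target_cost(t, u) and join_cost(prev_unit, u) return non-negative floats.
    beam: number of cheapest partial paths kept after each step.
    Returns (total_cost, [selected units]).
    """
    paths = [(target_cost(targets[0], u), [u]) for u in candidates[0]]
    paths = sorted(paths, key=lambda p: p[0])[:beam]
    for target, cands in zip(targets[1:], candidates[1:]):
        new_paths = []
        for u in cands:
            # Keep only the best surviving predecessor for this candidate.
            cost, seq = min(((c + join_cost(s[-1], u), s) for c, s in paths),
                            key=lambda p: p[0])
            new_paths.append((cost + target_cost(target, u), seq + [u]))
        paths = sorted(new_paths, key=lambda p: p[0])[:beam]
    return min(paths, key=lambda p: p[0])


# Toy example: units and targets are just numbers.
result = select_units([1, 2, 3], [[1, 5], [2, 6], [3, 7]],
                      target_cost=lambda t, u: abs(t - u),
                      join_cost=lambda prev, u: 0.5 * abs(prev - u))
print(result)  # (1.0, [1, 2, 3])
```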

After finding the best set of candidate units, we need to join them. This is true for diphones, nphones or unit selection algorithms. We will also need to modify their pitch and duration (independently) to make the selected units match their targets. In the selection-based approach, as the selected units may already be very close to the desired prosody, sometimes you may get away with no modification.

There are a number of techniques available to modify pitch and duration with minimum distortion to the signal. Most of these techniques are pitch synchronous; that is, they view the speech as a stream of short signals, each starting at the "burst" of a pitch mark and running to just before the next. In unvoiced speech some arbitrary section is chosen.

To modify duration without modifying pitch, we delete (or duplicate) pitch-period signals, keeping the same distance between them.
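A minimal sketch of the duration change, assuming the speech has already been cut into pitch-period signals (represented here by placeholder labels) and that they are overlap-added afterwards at their original spacing. Mapping each output period to the nearest input period is one simple way to decide which periods to repeat or drop:

```python
def change_duration(periods, factor):
    """Lengthen (factor > 1) or shorten (factor < 1) a sequence of
    pitch-period signals by repeating or dropping whole periods.

    The periods themselves are untouched, so the pitch is unchanged.
    """
    n_out = max(1, round(len(periods) * factor))
    # Map each output period back to an input period, evenly spread.
    return [periods[min(len(periods) - 1, int(k * len(periods) / n_out))]
            for k in range(n_out)]


print(change_duration(["p0", "p1", "p2", "p3"], 1.5))  # ['p0', 'p0', 'p1', 'p2', 'p2', 'p3']
```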

To modify pitch, we move the signals so they overlap more (raising pitch) or become further apart (lowering pitch). Usually each signal is windowed (with a Hamming or similar window) to reduce distortion at the edges.
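A bare-bones overlap-add sketch in the style of TD-PSOLA. It assumes each input item is already a short signal extracted around one pitch mark (typically about two periods long) and that a single constant target period, in samples, is wanted; real implementations follow a time-varying pitch contour and combine this with duration modification:

```python
import math


def hamming(n):
    """Hamming window of length n."""
    if n == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def overlap_add(segments, new_period):
    """Window each short signal and add it into the output, with successive
    signals starting new_period samples apart.

    A smaller new_period packs them closer together (higher pitch);
    a larger one spreads them further apart (lower pitch).
    """
    length = (len(segments) - 1) * new_period + max(len(s) for s in segments)
    out = [0.0] * length
    for k, seg in enumerate(segments):
        start = k * new_period
        for i, (x, w) in enumerate(zip(seg, hamming(len(seg)))):
            out[start + i] += x * w
    return out
```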

The following techniques exist in Festival (one is in an external program)

`(Parameter.set 'Duration_Method 'Default)`. It is important that the voice sound synthetic rather than bad.

Running `(voice_french_mbrola)` followed by `(SayText "bonjour")` will work with no changes. Alternatively, try to build a full text-to-speech synthesizer for Klingon. We have a set of Klingon diphones, and you can probably find more information by searching for "Klingon Language" on the web.
